ISSN 0253-2778

CN 34-1054/N

From a statistical viewpoint, statistical inference in federated learning is essential for understanding the underlying data distribution. Because both the number of local iterations and the local datasets are heterogeneous across clients, traditional statistical inference methods are not directly applicable to federated learning. This paper studies how to construct confidence intervals for federated heterogeneous optimization problems. We introduce the rescaled federated averaging estimate and prove its consistency. Focusing on confidence interval estimation, we establish the asymptotic normality of the parameter estimate produced by our algorithm and show that the asymptotic covariance is inversely proportional to the client participation rate. We then propose an online confidence interval estimation method called separated plug-in via rescaled federated averaging, which constructs valid confidence intervals online even when the number of local iterations differs across clients. Since clients and their local datasets vary, heterogeneity in the number of local iterations is common in practice. Consequently, confidence interval estimation for federated heterogeneous optimization problems is of great significance.
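The abstract describes the estimation pipeline only at a high level. The Python sketch below illustrates the general setting under stated assumptions: federated averaging in which clients run different numbers of local SGD steps, only a random fraction of clients participates in each round, and each client's update is rescaled by dividing it by its own number of local steps. That normalization is FedNova-style and chosen here for illustration; it is not necessarily the rescaling defined in the paper. The least-squares model, the participation probability `nu`, the step-size schedule, the final Polyak-Ruppert averaging, and the function name `rescaled_fedavg` are all hypothetical.

```python
import random


def rescaled_fedavg(theta_star, n_clients=10, rounds=2000, nu=0.5,
                    eta0=0.5, alpha=0.6, noise_sd=1.0, seed=0):
    """Hypothetical sketch of federated averaging with heterogeneous local steps.

    Assumptions (not taken from the paper): least-squares loss on data drawn
    from y = x . theta_star + noise, client i runs K_i = 1 + (i % 5) local SGD
    steps, each client joins a round independently with probability nu, and
    each client's displacement is divided by K_i before the server averages.
    """
    rng = random.Random(seed)
    d = len(theta_star)
    # Client heterogeneity: shifted feature means and unequal local step counts.
    shifts = [(i - (n_clients - 1) / 2) / n_clients for i in range(n_clients)]
    local_steps = [1 + (i % 5) for i in range(n_clients)]

    def sample(i):
        # Bounded features x = (1, u_1, ..., u_{d-1}); with the defaults (d = 2)
        # this keeps eta * |x|^2 below 2, so local SGD steps stay stable.
        x = [1.0] + [rng.uniform(-1.0, 1.0) + shifts[i] for _ in range(d - 1)]
        y = sum(a * b for a, b in zip(x, theta_star)) + rng.gauss(0.0, noise_sd)
        return x, y

    theta = [0.0] * d
    theta_bar = [0.0] * d  # running Polyak-Ruppert average of server iterates
    for t in range(1, rounds + 1):
        eta = eta0 * t ** (-alpha)  # decaying step size
        updates = []
        for i in range(n_clients):
            if rng.random() >= nu:
                continue  # client i does not participate this round
            local = theta[:]
            for _ in range(local_steps[i]):
                x, y = sample(i)
                resid = sum(a * b for a, b in zip(x, local)) - y
                local = [w - eta * resid * a for w, a in zip(local, x)]
            # Rescaling step: average displacement per local iteration.
            updates.append([(new - old) / local_steps[i]
                            for new, old in zip(local, theta)])
        if updates:
            theta = [w + sum(u[j] for u in updates) / len(updates)
                     for j, w in enumerate(theta)]
        theta_bar = [b + (w - b) / t for b, w in zip(theta_bar, theta)]
    return theta_bar


estimate = rescaled_fedavg([1.0, -0.5])
print([round(v, 3) for v in estimate])  # should be close to [1.0, -0.5]
```

Dividing each update by $K_i$ keeps clients that run many local steps from dominating the server update, which is one common way to handle heterogeneous local iteration counts.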

An online confidence interval estimation method called separated plug-in via rescaled federated averaging.

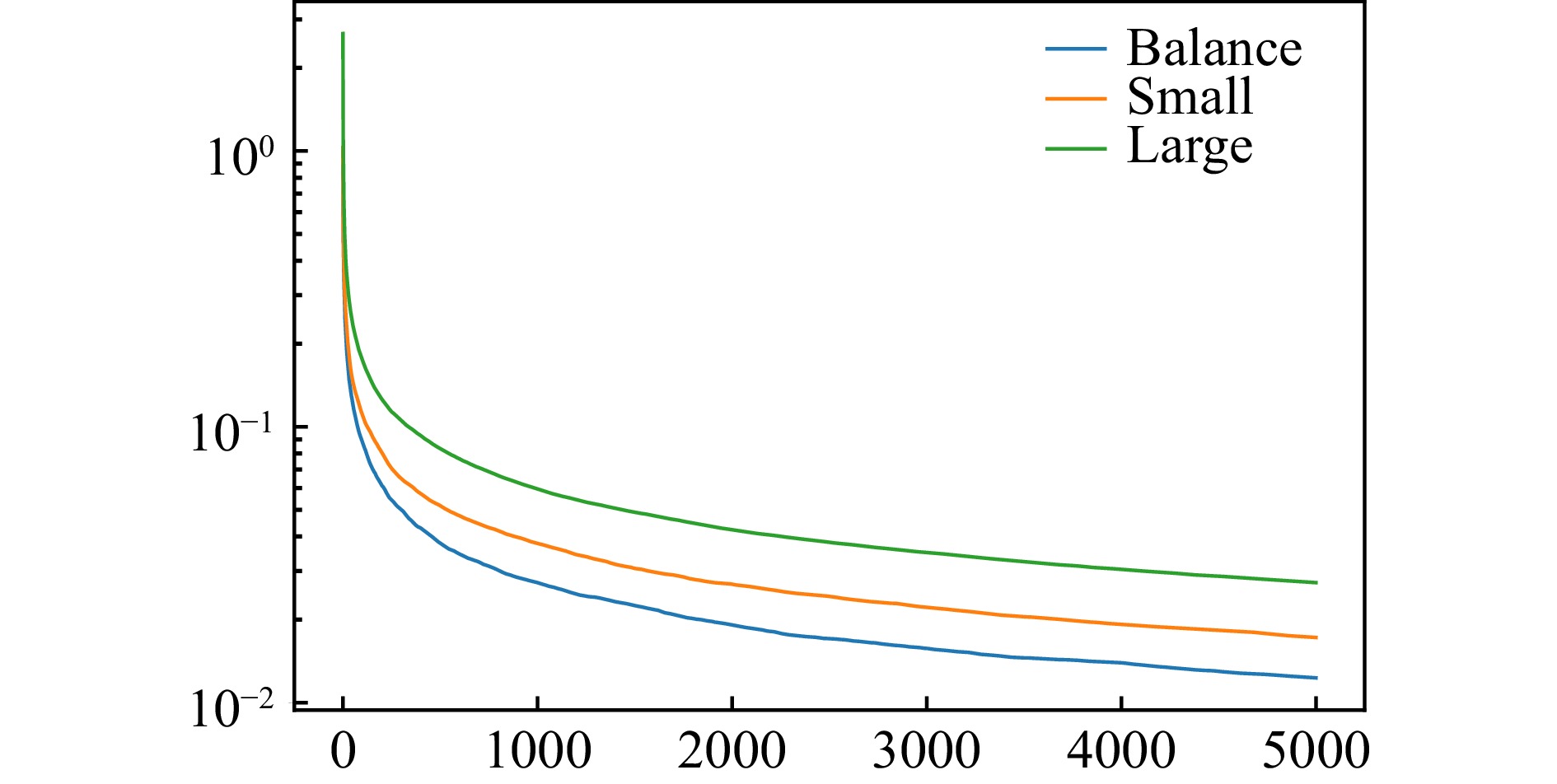

Figure 1. Impacts of


| Items | $\nu$ | $T=1000$ | $T=2000$ | $T=3000$ | $T=4000$ | $T=5000$ |
|---|---|---|---|---|---|---|
| Coverage rate | 0.2 | 0.934 | 0.942 | 0.950 | 0.949 | 0.952 |
| | 0.3 | 0.958 | 0.944 | 0.953 | 0.955 | 0.952 |
| | 0.4 | 0.948 | 0.934 | 0.943 | 0.957 | 0.949 |
| | 0.5 | 0.953 | 0.956 | 0.951 | 0.949 | 0.951 |
| Average radius ($\times 10^{-2}$) | 0.2 | 10.830 (0.616) | 7.587 (0.297) | 6.116 (0.196) | 5.325 (0.149) | 4.757 (0.120) |
| | 0.3 | 8.725 (0.377) | 6.140 (0.193) | 5.004 (0.131) | 4.328 (0.097) | 3.867 (0.077) |
| | 0.4 | 7.532 (0.298) | 5.299 (0.148) | 4.319 (0.098) | 3.736 (0.072) | 3.340 (0.058) |
| | 0.5 | 6.712 (0.223) | 4.733 (0.114) | 3.860 (0.075) | 3.339 (0.057) | 2.985 (0.045) |
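The abstract states that the asymptotic covariance is inversely proportional to the client participation rate. If $\nu$ in the preceding table is that participation rate and $T$ is the number of communication rounds (both assumptions, since the table caption is missing from this excerpt), and the interval radius shrinks at the usual $T^{-1/2}$ rate, then the average radius should scale roughly like $(\nu T)^{-1/2}$. The sketch below compares each cell's average radius, relative to the $\nu = 0.2$, $T = 1000$ cell, with that predicted ratio; the function name `max_relative_gap` is hypothetical.

```python
import math

# Average radius (x 10^-2) from the preceding table, keyed by nu; T = 1000..5000.
radius = {
    0.2: [10.830, 7.587, 6.116, 5.325, 4.757],
    0.3: [8.725, 6.140, 5.004, 4.328, 3.867],
    0.4: [7.532, 5.299, 4.319, 3.736, 3.340],
    0.5: [6.712, 4.733, 3.860, 3.339, 2.985],
}
horizons = [1000, 2000, 3000, 4000, 5000]


def max_relative_gap(radius, horizons, ref_nu=0.2, ref_idx=0):
    """Largest relative gap between observed radius ratios and the ratios
    predicted by an assumed (nu * T)^(-1/2) scaling, using one reference cell."""
    r0 = radius[ref_nu][ref_idx]
    t0 = horizons[ref_idx]
    worst = 0.0
    for nu, row in radius.items():
        for t, r in zip(horizons, row):
            predicted = math.sqrt((ref_nu * t0) / (nu * t))
            observed = r / r0
            worst = max(worst, abs(observed - predicted) / predicted)
    return worst


print(f"{max_relative_gap(radius, horizons):.3f}")
```

With these numbers the largest relative gap is about 0.026, so every cell agrees with the assumed $(\nu T)^{-1/2}$ scaling to within roughly 3%.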

| Items | $\nu$ | $T=1000$ | $T=2000$ | $T=3000$ | $T=4000$ | $T=5000$ |
|---|---|---|---|---|---|---|
| Coverage rate | 0.2 | 0.955 | 0.937 | 0.934 | 0.942 | 0.952 |
| | 0.3 | 0.937 | 0.948 | 0.944 | 0.944 | 0.944 |
| | 0.4 | 0.938 | 0.948 | 0.948 | 0.951 | 0.944 |
| | 0.5 | 0.940 | 0.935 | 0.946 | 0.952 | 0.955 |
| Average radius ($\times 10^{-2}$) | 0.2 | 16.430 (8.498) | 10.960 (4.857) | 8.749 (3.605) | 7.507 (3.157) | 6.536 (2.734) |
| | 0.3 | 11.790 (5.347) | 8.297 (3.604) | 6.760 (2.816) | 5.754 (2.384) | 5.145 (2.193) |
| | 0.4 | 9.865 (4.311) | 6.866 (2.904) | 5.678 (2.228) | 4.874 (2.051) | 4.318 (1.797) |
| | 0.5 | 8.665 (3.556) | 6.056 (2.538) | 4.980 (1.982) | 4.313 (1.739) | 3.851 (1.503) |

| Items | $\nu$ | $T=1000$ | $T=2000$ | $T=3000$ | $T=4000$ | $T=5000$ |
|---|---|---|---|---|---|---|
| Coverage rate | 0.2 | 0.944 | 0.949 | 0.939 | 0.955 | 0.946 |
| | 0.3 | 0.938 | 0.942 | 0.945 | 0.945 | 0.958 |
| | 0.4 | 0.941 | 0.942 | 0.952 | 0.955 | 0.953 |
| | 0.5 | 0.956 | 0.944 | 0.954 | 0.944 | 0.956 |
| Average radius ($\times 10^{-2}$) | 0.2 | 8.248 (0.287) | 5.773 (0.135) | 4.690 (0.088) | 4.051 (0.064) | 3.616 (0.051) |
| | 0.3 | 6.651 (0.179) | 4.668 (0.088) | 3.800 (0.059) | 3.286 (0.043) | 2.935 (0.035) |
| | 0.4 | 5.718 (0.135) | 4.027 (0.064) | 3.280 (0.044) | 2.837 (0.033) | 2.536 (0.026) |
| | 0.5 | 5.087 (0.109) | 3.587 (0.051) | 2.924 (0.034) | 2.531 (0.026) | 2.009 (0.020) |

| Items | $\nu$ | $T=1000$ | $T=2000$ | $T=3000$ | $T=4000$ | $T=5000$ |
|---|---|---|---|---|---|---|
| Coverage rate | 0.2 | 0.941 | 0.944 | 0.938 | 0.953 | 0.951 |
| | 0.3 | 0.942 | 0.948 | 0.947 | 0.945 | 0.951 |
| | 0.4 | 0.953 | 0.958 | 0.949 | 0.952 | 0.952 |
| | 0.5 | 0.945 | 0.944 | 0.944 | 0.947 | 0.948 |
| Average radius ($\times 10^{-2}$) | 0.2 | 9.904 (4.834) | 7.090 (3.315) | 5.876 (2.759) | 5.099 (2.364) | 4.563 (2.075) |
| | 0.3 | 7.819 (3.713) | 5.868 (2.770) | 4.836 (2.220) | 4.170 (1.875) | 3.691 (1.619) |
| | 0.4 | 6.924 (3.392) | 5.003 (2.282) | 4.104 (1.805) | 3.551 (1.502) | 3.232 (1.406) |
| | 0.5 | 5.993 (2.809) | 4.213 (1.889) | 3.506 (1.556) | 3.139 (1.405) | 2.852 (1.269) |
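The tables above report two summaries over repeated simulations: the coverage rate (the fraction of replications whose interval contains the true parameter) and the average radius (the mean half-width of the interval). The sketch below shows how such summaries can be computed for a two-sided plug-in normal interval. The estimator is a deliberately simple stand-in (a sample mean with plug-in standard error), not the paper's federated estimator; the function name `coverage_and_radius`, the replication counts, and the parameters are arbitrary choices, and reading the parenthesized numbers in the tables as standard deviations of the radius across replications is also an assumption.

```python
import math
import random
from statistics import NormalDist, fmean, stdev


def coverage_and_radius(n_reps=1000, n=200, mu=0.3, sigma=1.0,
                        level=0.95, seed=1):
    """Empirical coverage rate and average radius of a plug-in normal CI.

    The estimator here is a stand-in chosen for illustration: the sample mean
    of n i.i.d. N(mu, sigma^2) draws, with plug-in standard error s / sqrt(n).
    """
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # two-sided critical value, ~1.96
    hits = 0
    radii = []
    for _ in range(n_reps):
        data = [rng.gauss(mu, sigma) for _ in range(n)]
        est = fmean(data)
        radius = z * stdev(data) / math.sqrt(n)  # half-width of the interval
        if est - radius <= mu <= est + radius:
            hits += 1  # the interval covers the true parameter
        radii.append(radius)
    # Coverage rate, mean radius, and spread of the radius across replications.
    return hits / n_reps, fmean(radii), stdev(radii)


rate, avg_radius, sd_radius = coverage_and_radius()
print(f"coverage {rate:.3f}, average radius {avg_radius:.4f} ({sd_radius:.4f})")
```

A coverage rate near the nominal level, together with a radius that shrinks as the sample grows, is the pattern the simulation tables are checking for.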

| Variable | Estimate | Sepa. CI | Rand. CI |
|---|---|---|---|
| Temperature | 0.4807 | (0.4657, 0.4957) | (0.3283, 0.6331) |
| Humidity | 0.0440 | (0.0312, 0.0568) | (0.0307, 0.0573) |
| Wind speed | 0.0547 | (0.0410, 0.0684) | (0.0264, 0.0830) |
| General diffuse flows | -0.0360 | (-0.0493, -0.0227) | (-0.1320, 0.0600) |
| Diffuse flows | -0.1005 | (-0.1125, -0.0885) | (-0.1867, -0.0143) |

| Variable | Estimate | Sepa. CI | Rand. CI |
|---|---|---|---|
| B | 0.8758 | (0.7087, 1.0429) | (0.2439, 1.5077) |
| G | -0.3485 | (-0.5160, -0.1810) | (-1.1258, 0.4288) |
| R | -0.3864 | (-0.4922, -0.2806) | (-1.1616, 0.3888) |
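The rows of the two tables above can be checked directly. The sketch below uses only the numbers reported there to confirm that every interval is centered at its point estimate, to compare the radii of the two intervals, and to flag intervals that contain zero. "Sepa." presumably denotes the separated plug-in interval described in the abstract; the abbreviation "Rand." is not expanded in this excerpt, and the helper names are hypothetical.

```python
# (estimate, Sepa. CI, Rand. CI) copied from the two tables above.
ROWS = {
    "Temperature": (0.4807, (0.4657, 0.4957), (0.3283, 0.6331)),
    "Humidity": (0.0440, (0.0312, 0.0568), (0.0307, 0.0573)),
    "Wind speed": (0.0547, (0.0410, 0.0684), (0.0264, 0.0830)),
    "General diffuse flows": (-0.0360, (-0.0493, -0.0227), (-0.1320, 0.0600)),
    "Diffuse flows": (-0.1005, (-0.1125, -0.0885), (-0.1867, -0.0143)),
    "B": (0.8758, (0.7087, 1.0429), (0.2439, 1.5077)),
    "G": (-0.3485, (-0.5160, -0.1810), (-1.1258, 0.4288)),
    "R": (-0.3864, (-0.4922, -0.2806), (-1.1616, 0.3888)),
}


def half_width(ci):
    """Radius of an interval (lo, hi)."""
    return (ci[1] - ci[0]) / 2


def is_centered(est, ci, tol=1e-4):
    """True if the interval is symmetric around the point estimate."""
    return abs((ci[0] + ci[1]) / 2 - est) <= tol


def excludes_zero(ci):
    """True if 0 lies outside the interval."""
    return ci[0] > 0 or ci[1] < 0


for name, (est, sepa, rand) in ROWS.items():
    print(f"{name:<22} Sepa. radius {half_width(sepa):.4f}  "
          f"Rand. radius {half_width(rand):.4f}  "
          f"0 excluded: {excludes_zero(sepa)} / {excludes_zero(rand)}")
```

With these numbers every interval is symmetric about its estimate, every Rand. interval is wider than its Sepa. counterpart, every Sepa. interval excludes zero, and the Rand. intervals for General diffuse flows, G, and R contain zero.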